

Humanoid robots offer two unparalleled advantages in general-purpose embodied intelligence. First, humanoids are built as generalist robots that can potentially do all the tasks humans can do in complex environments. Second, the embodiment alignment between humans and humanoids allows for the seamless integration of human cognitive skills with versatile humanoid capabilities, making humanoids the most promising physical embodiment for AI. Whole-body humanoid control is challenging because of its high degrees of freedom and contact-rich nature (see this survey). We aim to develop learning-based whole-body control methods for humanoid locomotion and loco-manipulation problems, enabling humanoids to interact with the physical world, adapt quickly to many tasks and environments, and integrate with high-level decision-making layers such as VLMs.

Tairan He*, Zhengyi Luo*, Xialin He*, Wenli Xiao, Chong Zhang, Weinan Zhang, Kris Kitani, Changliu Liu, Guanya Shi
Conference on Robot Learning (CoRL), 2024
paper, website, dataset, code, IEEE Spectrum
TL;DR: OmniH2O provides a universal whole-body humanoid control interface that enables diverse teleoperation and autonomy methods.

Chong Zhang*, Wenli Xiao*, Tairan He, Guanya Shi
Conference on Robot Learning (CoRL), 2024 (Oral Presentation)
paper, website, IEEE Spectrum
TL;DR: WoCoCo is a task-agnostic skill learning framework that requires no motion priors and works by decomposing long-horizon tasks into contact sequences.

Yuanhang Zhang, Yifu Yuan, Prajwal Gurunath, Tairan He, Shayegan Omidshafiei, Ali-akbar Agha-mohammadi, Marcell Vazquez-Chanlatte, Liam Pedersen, Guanya Shi
paper, website, code
TL;DR: FALCON enables various heavy-duty humanoid loco-manipulation tasks via a new dual-agent force-adaptive RL framework.


Offline-learned policies for robotic control have shown great success in the robot learning community. Examples include manipulation policies learned from demonstrations using imitation learning with generative models, and locomotion policies learned in simulation using sim2real reinforcement learning. However, those policies are frozen at test time and cannot efficiently adapt to new environments or tasks. We aim to develop methods that can effectively learn "adaptable" representations from offline data and efficiently adapt in real time.
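As a rough illustration of the split between offline learning and online adaptation described above, the minimal Python sketch below keeps a feature map frozen (a stand-in for a representation learned offline) and adapts only a small vector of linear weights online with a normalized gradient step. The basis in `features`, the step size, and the excitation signal are all assumptions chosen for illustration; this is not the update rule of any specific paper listed on this page.

```python
import math

def features(x):
    """Frozen representation. Hypothetical hand-written basis standing in
    for features that would be learned offline."""
    return [1.0, x, math.sin(x)]

def predict(weights, x):
    """Linear read-out on top of the frozen features."""
    return sum(w * p for w, p in zip(weights, features(x)))

def adapt_step(weights, x, target, step_size=0.5):
    """One normalized gradient step on the squared prediction error.
    Only the linear weights change; the features stay fixed."""
    phi = features(x)
    error = predict(weights, x) - target
    norm = 1.0 + sum(p * p for p in phi)
    return [w - step_size * error * p / norm for w, p in zip(weights, phi)]

def run_adaptation(true_weights, steps=2000):
    """Adapt online against targets produced by unknown 'true' weights."""
    weights = [0.0, 0.0, 0.0]
    for k in range(steps):
        x = 3.0 * math.sin(0.37 * k)        # deterministic excitation signal (assumed)
        target = predict(true_weights, x)   # stand-in for a real-time measurement
        weights = adapt_step(weights, x, target)
    return weights
```

Calling `run_adaptation([0.5, -1.0, 2.0])` returns the adapted weights; in this noise-free toy setting the normalized step never increases the distance to the true weights, which is the kind of property one would want an online adaptation law to have.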


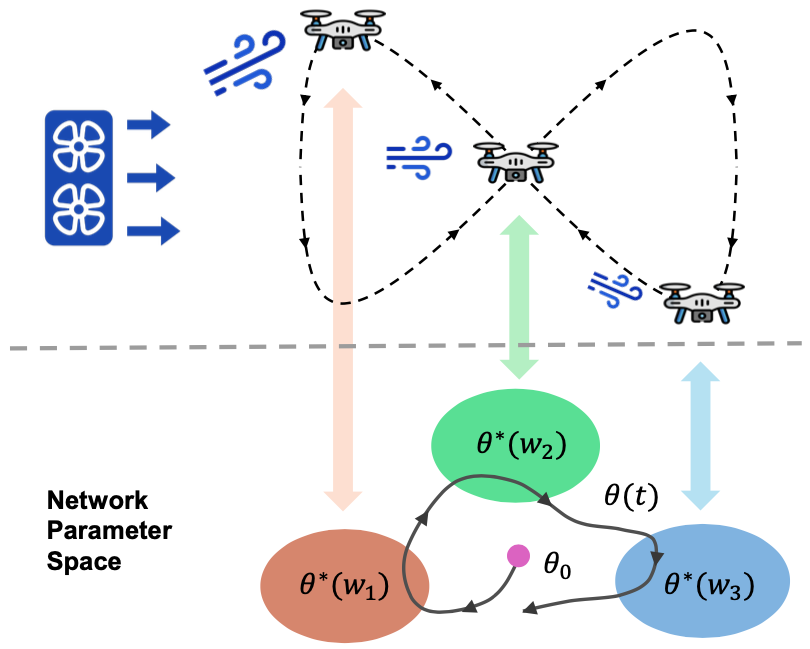

Michael O'Connell*, Guanya Shi*, Xichen Shi, Kamyar Azizzadenesheli, Animashree Anandkumar, Yisong Yue, Soon-Jo Chung
Science Robotics
paper, video, Caltech news, Reuters, CNN, code
TL;DR: Neural-Fly uses adaptive control to online fine-tune a meta-pretrained DNN representation, enabling rapid adaptation in strong winds.

Kevin Huang, Rwik Rana, Alexander Spitzer, Guanya Shi, Byron Boots
Conference on Robot Learning (CoRL), 2023 (Oral presentation, 6.6%)
paper, website, code
TL;DR: DATT can precisely track arbitrary, potentially infeasible trajectories in the presence of large disturbances.

Wenli Xiao*, Haoru Xue*, Tony Tao, Dvij Kalaria, John M. Dolan, Guanya Shi
International Conference on Robotics and Automation (ICRA), 2025
paper, website, code, IEEE Spectrum
TL;DR: AnyCar is a transformer-based dynamics model that can adapt to various vehicles, environments, state estimators, and tasks.


Sim2real Reinforcement Learning (RL) has become the dominant method for many locomotion problems, especially for humanoids, legged robots, drones, and ground vehicles. However, the large sim2real gap, tedious reward tuning, and the lack of diversity hinder its application to other problems such as loco-manipulation and dexterous manipulation. We aim to address these limitations by enhancing the simulation training environment using real-world data, an approach often referred to as real2sim2real.
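One simple way to picture "enhancing the simulation using real-world data" is parameter identification: adjust a simulator parameter until simulated trajectories match recorded ones. The Python sketch below does this for a single damping coefficient with a sampling-based search. Everything in it is an illustrative assumption (the one-dimensional damped-mass model, the parameter names, and the synthetic trace used in place of real logs), and it is not the procedure used by any paper listed here.

```python
import random

def simulate(damping, steps=50, dt=0.05, force=1.0):
    """Velocity trace of a unit mass with linear damping under a constant force."""
    velocity, trace = 0.0, []
    for _ in range(steps):
        velocity = velocity + (force - damping * velocity) * dt
        trace.append(velocity)
    return trace

def trajectory_error(damping, real_trace):
    """Sum of squared differences between simulated and recorded velocities."""
    sim_trace = simulate(damping, steps=len(real_trace))
    return sum((s - r) ** 2 for s, r in zip(sim_trace, real_trace))

def identify_damping(real_trace, initial_guess=0.1, iterations=20,
                     samples=32, seed=0):
    """Sampling-based search: perturb the current best estimate, keep the
    candidate with the lowest trajectory error, then shrink the search spread."""
    rng = random.Random(seed)
    best = initial_guess
    best_error = trajectory_error(best, real_trace)
    spread = 0.5
    for _ in range(iterations):
        center = best
        candidates = [max(0.0, center + rng.gauss(0.0, spread))
                      for _ in range(samples)]
        for candidate in candidates:   # these evaluations are independent
            error = trajectory_error(candidate, real_trace)
            if error < best_error:
                best, best_error = candidate, error
        spread *= 0.7
    return best, best_error

# Synthetic stand-in for real-world data (assumption: true damping is 0.8).
recorded = simulate(0.8)
estimate, fit_error = identify_damping(recorded)
```

Because the initial guess is always kept as a candidate, the fitted error can never be worse than the starting error. The inner loop evaluates each candidate independently, which is why searches of this kind parallelize well.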


Tairan He*, Jiawei Gao*, Wenli Xiao*, Yuanhang Zhang*, Zi Wang, Jiashun Wang, Zhengyi Luo, Guanqi He, Nikhil Sobanbabu, Chaoyi Pan, Zeji Yi, Guannan Qu, Kris Kitani, Jessica Hodgins, Linxi "Jim" Fan, Yuke Zhu, Changliu Liu, Guanya Shi
paper, website, code
TL;DR: ASAP learns agile whole-body humanoid motions by learning a residual action model from real-world data to align simulated and real physics.

Nikhil Sobanbabu, Guanqi He, Tairan He, Yuxiang Yang, Guanya Shi
paper, website, code
TL;DR: SPI-Active is a general system ID tool based on parallel sampling-based optimization and active exploration, for legged sim2real learning.


Model-based RL (MBRL) first learns a dynamics model (or a "world" model) and then generates a policy via planning/optimization, policy learning, or search. The most compelling part of MBRL is that the learned model is task-agnostic, so the same model can be reused across tasks. We are interested in all aspects of MBRL, including model learning, theoretical frameworks, and planning using optimal control. In particular, we have recently developed new sampling-based optimal control frameworks that enable efficient online decision-making.
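The "model first, then plan" structure described above can be sketched with a very simple sampling-based planner. The Python example below uses random shooting in a receding-horizon loop: it perturbs a nominal action sequence, rolls each candidate through a dynamics model, and executes the first action of the cheapest one. The point-mass `model_step`, the cost weights, and all parameter values are assumptions for illustration (a learned model would take the place of `model_step`), and this is a generic textbook-style scheme rather than the method of any paper listed here.

```python
import random

def model_step(state, action, dt=0.1):
    """Dynamics model of a 1D point mass (hypothetical stand-in for a learned model)."""
    position, velocity = state
    velocity = velocity + action * dt
    position = position + velocity * dt
    return (position, velocity)

def rollout_cost(model, state, actions, goal):
    """Accumulated cost of applying an action sequence through the model."""
    cost = 0.0
    for action in actions:
        state = model(state, action)
        cost += (state[0] - goal) ** 2 + 0.1 * state[1] ** 2 + 0.001 * action ** 2
    return cost

def plan(model, state, goal, nominal, rng, num_samples=64, noise=1.0):
    """Random shooting: sample perturbations of the nominal plan, keep the best.
    The nominal plan itself is a candidate, so the result is never worse than it."""
    best_actions = list(nominal)
    best_cost = rollout_cost(model, state, best_actions, goal)
    for _ in range(num_samples):
        candidate = [a + rng.gauss(0.0, noise) for a in nominal]
        cost = rollout_cost(model, state, candidate, goal)
        if cost < best_cost:
            best_actions, best_cost = candidate, cost
    return best_actions, best_cost

def run_mpc(steps=50, horizon=10, seed=0):
    """Receding-horizon loop: replan each step, execute only the first action."""
    rng = random.Random(seed)
    state, goal = (0.0, 0.0), 1.0
    nominal = [0.0] * horizon
    for _ in range(steps):
        actions, _ = plan(model_step, state, goal, nominal, rng)
        state = model_step(state, actions[0])
        nominal = actions[1:] + [0.0]   # warm-start the next plan
    return state
```

Note that the goal enters only through `rollout_cost`, so changing the task does not require changing the model, which mirrors the task-agnostic property mentioned above.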


Haoru Xue*, Chaoyi Pan*, Zeji Yi, Guannan Qu, Guanya Shi
International Conference on Robotics and Automation (ICRA), 2025
paper, website, code
TL;DR: DIAL-MPC is the first training-free method achieving real-time whole-body torque control using full-order dynamics.

Haotian Lin, Pengcheng Wang, Jeff Schneider, Guanya Shi
paper, website, code
TL;DR: We observe the value overestimation issue in planner-based MBRL and propose a policy constraint solution with SOTA performance.

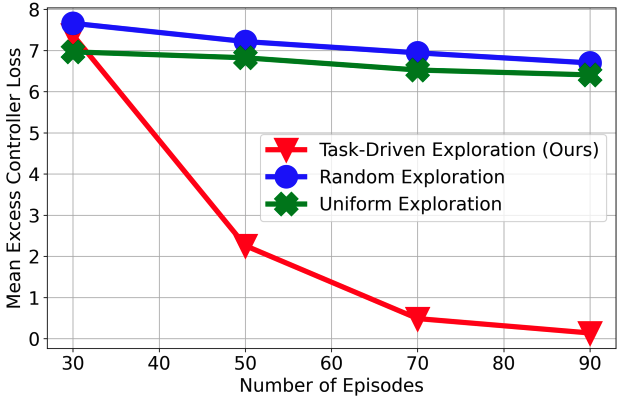

Andrew Wagenmaker, Guanya Shi, Kevin Jamieson
Neural Information Processing Systems (NeurIPS), 2023 (Spotlight, 3.1%)
paper
TL;DR: Not all model parameters are equally important. We develop an instance-optimal exploration algorithm for MBRL in nonlinear systems.


Compared to learning-based methods, one problem with traditional control methods is that their performance cannot effectively scale up with the amount of data and parallel computing. We focus on a new concept called computational control, i.e., control methods that can effectively scale up with the amount of data and parallel computing. Examples include sampling-based optimal control and "generative action models" (policy learning using generative models). The goal is to systematically understand, analyze, and enhance computational control methods and apply them to robotics. One of our recent interests is to understand how diffusion/flow-based methods work in decision-making problems.

Chaoyi Pan*, Zeji Yi*, Guanya Shi†, Guannan Qu†
Neural Information Processing Systems (NeurIPS), 2024
paper, website, code
TL;DR: MBD is a diffusion-based trajectory optimization method that directly computes the score function using models without any external data.


Existing work on aerial manipulation focuses primarily on the aerial perspective rather than on the general-purpose manipulation perspective. Our goal is to study aerial manipulation from the embodied intelligence perspective, building general hardware platforms and control methods. Similar to humanoids, we aim to solve the whole-body control problem for aerial manipulation. We are also interested in designing the high-level policy via vision-language-action (VLA) models or imitation learning.

Guanqi He*, Xiaofeng Guo*, Luyi Tang, Yuanhang Zhang, Mohammadreza Mousaei, Jiahe Xu, Junyi Geng, Sebastian Scherer, Guanya Shi
Robotics: Science and Systems (RSS), 2025
paper, website
TL;DR: A general-purpose aerial manipulation framework with an EE-centric interface that bridges whole-body control and policy learning.

Xiaofeng Guo*, Guanqi He*, Jiahe Xu, Mohammadreza Mousaei, Junyi Geng, Sebastian Scherer, Guanya Shi
IEEE Robotics and Automation Letters (RA-L), 2024
paper, website, IEEE Spectrum
TL;DR: Flying calligrapher enables precise hybrid motion and contact force control for an aerial manipulator in various drawing tasks.

Xiaofeng Guo, Guanqi He, Mohammadreza Mousaei, Junyi Geng, Guanya Shi, Sebastian Scherer
International Conference on Robotics and Automation (ICRA), 2024
paper, website
TL;DR: We introduce a new aerial manipulation system that leverages tactile feedback for accurate contact force control and texture detection.


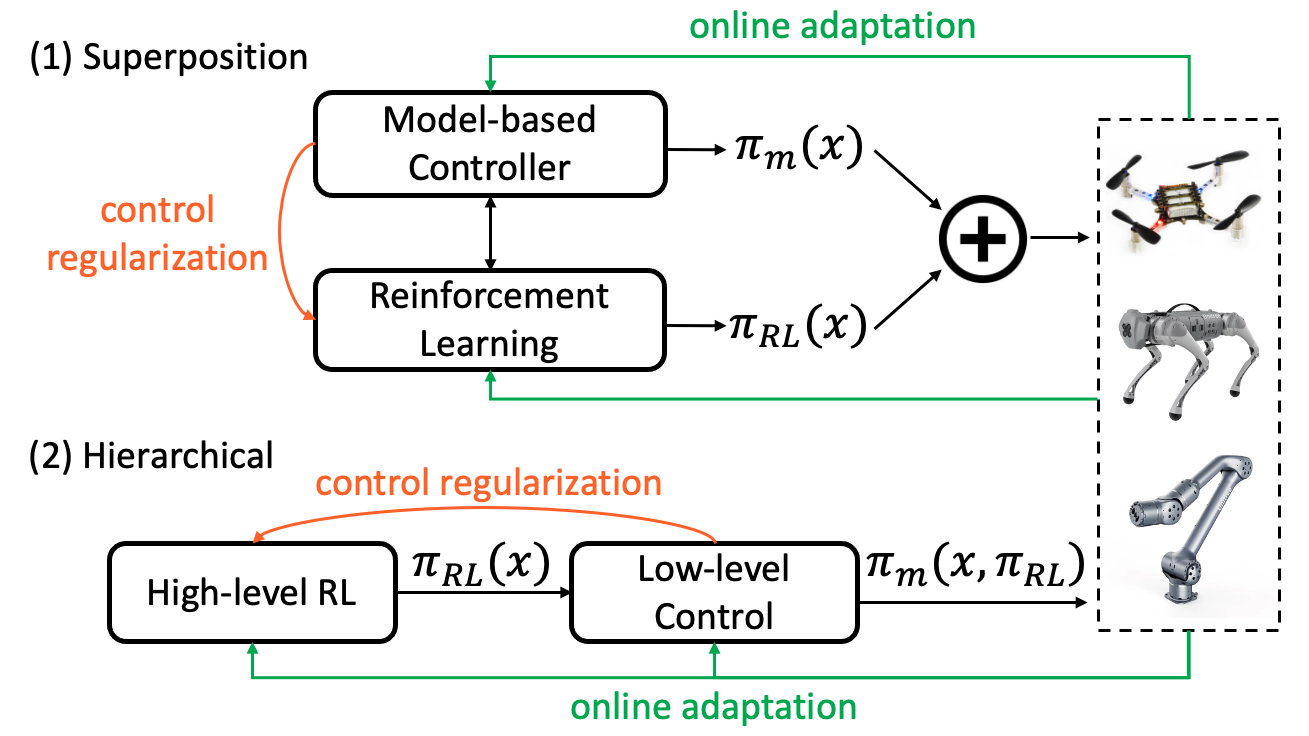

Most RL algorithms are general for all tasks. In contrast, drastically different control methods are developed for different systems/tasks, and their success relies heavily on the structure inside these systems/tasks. We seek to encode these structures and algorithmic principles into black-box RL algorithms, to make RL algorithms more data-efficient, robust, interpretable, and safe. Examples include hierarchical RL and optimal control methods, learning safety filters for RL policies, and learning-based nonlinear control with stability guarantees.
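To make the idea of a safety filter for a black-box policy concrete, here is a minimal Python sketch. It wraps an arbitrary policy for a one-dimensional single-integrator system and replaces each proposed action with the closest action that keeps the next state inside a box. The dynamics, the bound `x_max`, and the function names are assumptions made for illustration; the filter is hand-designed here rather than learned, and it is not the method of any paper listed on this page.

```python
import random

def safety_filter(x, u_nominal, x_max=1.0, dt=0.1):
    """Return the action closest to u_nominal such that the next state
    x + u * dt stays within [-x_max, x_max]."""
    u_low = (-x_max - x) / dt
    u_high = (x_max - x) / dt
    return min(max(u_nominal, u_low), u_high)

def run_filtered(policy, steps=200, x0=0.0, dt=0.1, x_max=1.0):
    """Roll out a black-box policy with the safety filter in the loop."""
    x, trajectory = x0, [x0]
    for _ in range(steps):
        u = safety_filter(x, policy(x), x_max, dt)
        x = x + u * dt          # single-integrator dynamics (assumed)
        trajectory.append(x)
    return trajectory

# Usage: an erratic random policy stands in for an unverified RL policy.
rng = random.Random(1)
states = run_filtered(lambda x: rng.gauss(0.0, 20.0))
```

The filter leaves safe actions untouched and only intervenes near the boundary, so the policy keeps its behavior wherever the known system structure says it is safe to do so.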


Tairan He*, Chong Zhang*, Wenli Xiao, Guanqi He, Changliu Liu, Guanya Shi
Robotics: Science and Systems (RSS), 2024 (Outstanding Student Paper Award Finalist)
paper, website, code, IEEE Spectrum, CMU News
TL;DR: ABS enables fully onboard, agile (>3m/s), and collision-free locomotion for quadrupedal robots in cluttered environments.

Yuxiang Yang, Guanya Shi, Changyi Lin, Xiangyun Meng, Rosario Scalise, Mateo Guaman Castro, Wenhao Yu, Tingnan Zhang, Ding Zhao, Jie Tan, Byron Boots
International Conference on Robotics and Automation (ICRA), 2025
paper, website, code
TL;DR: Continuous, agile, and autonomous quadrupedal jumping via hierarchical model-free RL and model-based control.

Guanqi He, Yogita Choudhary, Guanya Shi
International Conference on Robotics and Automation (ICRA), 2025
paper, website, code
TL;DR: Pretrain a residual dynamics DNN using meta-learning and fine-tune the whole DNN online using adaptive control with stability guarantees.